One of the most studied topics in economics is the impact of a per-unit tax that is applied to one product and not to others. It is well understood that such a tax increases the cost of supplying the taxed product, thereby leading to an increase in its price. This discourages demand for the taxed product while encouraging the consumption of alternatives.
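The mechanism can be illustrated with a minimal sketch, assuming linear demand and supply curves. The function name and every number below are hypothetical and chosen only to make the arithmetic easy to follow; none of them comes from tobacco or energy market data.

```python
def equilibrium(a, b, c, d, tax=0.0):
    """Market equilibrium under a per-unit tax (illustrative only).

    Assumed, hypothetical curves:
      demand: Q = a - b * P            (P is the price consumers pay)
      supply: Q = c + d * (P - tax)    (sellers keep P minus the tax)
    Returns the consumer price and the quantity traded.
    """
    price = (a - c + d * tax) / (b + d)
    quantity = a - b * price
    return price, quantity


# Hypothetical market before and after a per-unit tax of 3.
price_before, quantity_before = equilibrium(a=100, b=2, c=10, d=1)
price_after, quantity_after = equilibrium(a=100, b=2, c=10, d=1, tax=3)

# Revenue raised is the tax rate times the quantity still sold.
revenue = 3 * quantity_after

print(price_before, quantity_before)
print(price_after, quantity_after, revenue)
```

In this sketch the consumer price rises by less than the full tax, the quantity sold falls, and the government collects revenue on the units still traded. How the burden is shared between buyers and sellers depends on the assumed slopes.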

Through this mechanism, tobacco taxation is intended to reduce the incidence of smoking-related disease while generating government revenue, thereby relieving pressure on the public health system and the public purse. The purpose of a per-unit tobacco tax, therefore, is to shift some of the public health bill towards the users of tobacco and away from the average non-smoking taxpayer. It is applied to something we all want less of: a tax on a bad rather than a good.

In this way, a tax on tobacco that captures its associated health costs is believed to be an economically efficient tax.

In general, taxes in Australia are not efficient in design. This view would no doubt be shared by most, if not all, Australian economists and likely by politicians right across the spectrum.

Most of our taxes are not applied to activities that we would seek to discourage but rather are placed on factors of production – such as labour, capital, land, materials and energy – the employment of which is normally encouraged in the pursuit of economic growth. This is inefficient because, in the same way that a tax on tobacco discourages its use, a tax on a productive factor reduces a business’s incentive to employ it for any given level of final demand.

Viewing human-caused greenhouse gas (GHG) emissions as a counter-productive bad leads to consideration of a pricing policy similar to that applied to tobacco products. However, unlike tobacco, human-caused GHG emissions are always bundled up with some factor of production, in particular fossil-fuel-based energy. The Federal Government’s proposal to put a price on GHG emissions will raise the purchase price of more polluting fossil energy relative to less polluting energy alternatives.

So what does this mean for economic growth?

Given that this policy will discourage the use of currently low priced factors of production, brown coal for example, at first glance it may appear to represent a drag on employment. However, a GHG emission price will generate substantial government revenue. Such revenue will be directed towards cutting inefficient taxes on low polluting factors of production as a form of compensation for the higher product prices that are likely to result from the policy. In principle, revenue generated by taxing counter-productive bads can be used to lessen the tax burden on highly productive business inputs such as labour and capital.

All else being equal, tax cuts on labour and capital will increase wages and profits. Because labour and capital are factors of production, reductions in their taxation will encourage their greater employment. In isolation, this will have a positive impact on economic growth.

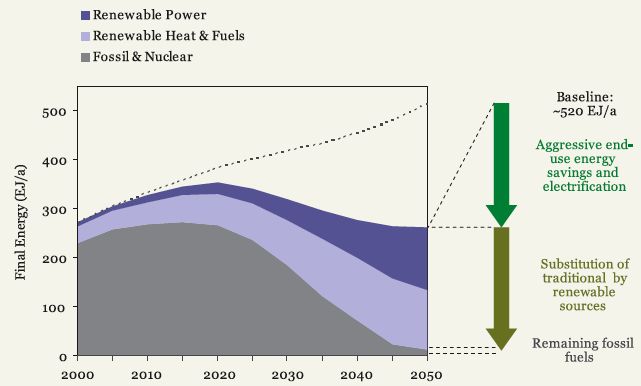

Labour and capital are substitutes for carbon- and hydrocarbon-based fuels. Less GHG-intensive electricity generation, for example, is often attainable through the application of marginally more expensive capital equipment. Similarly, renewable energy and energy efficiency technologies employ more labour per unit of energy delivered than fossil-fuel-based technologies (Wei et al., 2010). In this way, lowering the tax rate on labour and capital will assist in the transition to lower emission systems of production.

A price on GHG emissions will provide not only a disincentive to invest in current high emission technologies but will also incentivise the application of new low emission technologies. Nobel laureate John Hicks (1932) suggested that an increase in the price of one factor of production compared to another would drive innovation aimed at saving the now more expensive input. Empirical evidence on the correlation between energy prices and energy productivity has since supported this hypothesis (e.g. Newell et al., 1999).

Another Nobel laureate, Robert Solow (1957), found that factor-saving technical change accounted for 87.5 per cent of economic growth per unit of labour in the United States over the period 1909 to 1949. More recent contributions by Lucas (1988), also a Nobel Prize winner, and Romer (1986) placed even greater emphasis on factor productivity as a cause of economic growth.

Kenneth Arrow (1962), yet another Nobel Prize winner, highlighted the importance of learning-by-doing in driving technical change and, by implication, economic growth. Unit cost reductions appear to be more rapid in the early stages of a technology’s deployment. This suggests that, from now on, the capital costs of modern low emission energy transformation processes are likely to fall far more rapidly than those of the long established high emission technologies, provided the newer technologies are encouraged to enter the market so that such learning can occur. Arrow (2007, p. 5) suggests that “Since energy-saving reduces energy costs…” we should not be surprised if the gross effect of GHG mitigation policy over the coming decades will be to promote economic growth rather than to suppress it.
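To see why early deployment matters, consider a minimal sketch of a learning curve. It assumes the common experience-curve form in which unit cost falls by a fixed percentage with every doubling of cumulative output. This functional form, the 20 per cent learning rate and the unit counts are illustrative assumptions, not figures taken from Arrow (1962) or any other source cited here.

```python
import math


def unit_cost(initial_cost, initial_units, cumulative_units, learning_rate):
    """Unit cost under an assumed experience curve (illustrative only).

    Cost falls by `learning_rate` (e.g. 0.2 for 20 per cent) each time
    cumulative output doubles relative to `initial_units`.
    """
    exponent = -math.log2(1.0 - learning_rate)
    return initial_cost * (cumulative_units / initial_units) ** (-exponent)


def cost_decline(installed_units, added_units, learning_rate=0.2):
    """Fractional fall in unit cost from adding `added_units` of output."""
    before = unit_cost(100.0, installed_units, installed_units, learning_rate)
    after = unit_cost(100.0, installed_units,
                      installed_units + added_units, learning_rate)
    return 1.0 - after / before


# Hypothetical comparison: the same 100 extra units are added to a young
# technology (10 units installed) and a mature one (1,000 units installed).
young_decline = cost_decline(installed_units=10, added_units=100)
mature_decline = cost_decline(installed_units=1000, added_units=100)

print(f"young technology cost falls by {young_decline:.1%}")
print(f"mature technology cost falls by {mature_decline:.1%}")
```

Under these assumptions the same amount of new deployment lowers the unit cost of the young technology far more than that of the mature one, because the young technology passes through several doublings of cumulative output while the mature one completes only a fraction of a doubling.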

All of this suggests that economic growth concerns provide little or no justification to oppose a policy of tax cuts funded by a GHG emission price. The evidence reviewed here suggests this is a low cost means to the bipartisan end of precautionary GHG mitigation. Even if one were tenacious in a belief that the mainstream climate science is seriously flawed (i.e. that climate change is minor or that it is not strongly influenced by economic activity), a rejection of the low cost domestic policy response described above would not follow naturally from such a belief, unless one were to also reject the mainstream economic science. Putting a tax on something we don’t want and cutting taxes on things we do is probably good economic policy.

References

Arrow, K. J. (1962). The economic implications of learning by doing. The Review of Economic Studies, 29(3), 155-173.

Arrow, K. J. (2007). Global climate change: A challenge to policy. The Economists’ Voice, 4(3), Article 2, 1-5.

Hicks, J. R. (1932). The Theory of Wages. London: Macmillan.

Lucas, R. E. (1988). On the mechanics of economic development. Journal of Monetary Economics, 22(1), 3-42.

Newell, R. G., Jaffe, A. B., & Stavins, R. N. (1999). The induced innovation hypothesis and energy-saving technological change. The Quarterly Journal of Economics, 114(3), 941-975.

Romer, P. M. (1986). Increasing returns and long-run growth. Journal of Political Economy, 94(5), 1002-1037.

Solow, R. M. (1957). Technical change and the aggregate production function. The Review of Economics and Statistics, 39(3), 312-320.

Wei, M., Patadia, S., & Kammen, D. M. (2010). Putting renewable and energy efficiency to work: How many jobs can the clean energy industry generate in the US? Energy Policy, 38, 919-931.